This post aims to raise awareness among Gluster users of a feature that has been around since GlusterFS 4.0 and has helped immensely in deployments that need to scale to a large number of Gluster volumes. The Gluster development community has made this feature quite stable over the last few releases, and based on our internal testing and reports from customers, it has made it possible for a 3-node cluster to host over a thousand volumes. Most of this post, where we explain the details of the feature and its benefits, is borrowed from notes shared by Jeff Darcy, the primary author of the feature.

“Brick multiplexing” is a feature that allows multiple bricks to be served from a single glusterfsd process. This promises to give us many benefits over the traditional “process per brick” approach:

- Lower total memory use, by having only one copy of various global structures instead of one per brick/process.

- Less CPU contention. Every glusterfsd process involves several threads. If there are more total threads than physical cores, or if those cores are also needed for other work on the same system, we’ll thrash pretty badly. As with memory use, sharing global data structures helps, and so does managing each thread type as a single pool (instead of one pool per brick/process).

- Fewer ports. In the extreme case, we need only have one process and one port per node. This avoids port exhaustion for high node/brick counts, and can also be more firewall-friendly.

- Better coordination between bricks e.g. to implement QoS policies.

When to use brick multiplexing:

Use it if you run a deployment where a single node needs to host hundreds of volumes, and therefore hundreds of bricks.

How to use brick multiplexing:

“cluster.brick-multiplex” is a global option that must be turned on to enable brick multiplexing. Please note that for existing bricks to pick up this setting, you need to restart the volumes or restart the brick processes (pkill gluster; glusterd) one node at a time. To enable brick multiplexing, run the following command:

gluster volume set all cluster.brick-multiplex on
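As a minimal sketch, the command can be wrapped in a small function that also reads the value back. The `GLUSTER` variable and the `enable_brick_mux` function name are hypothetical, introduced only so the sketch can be dry-run without a cluster, and the read-back assumes the installed release supports `gluster volume get all <option>`.

```shell
# Sketch only: GLUSTER is a hypothetical override for the CLI binary,
# so the function can be dry-run (e.g. GLUSTER=echo) away from a cluster.
GLUSTER="${GLUSTER:-gluster}"

enable_brick_mux() {
    # "all" targets the global (cluster-wide) option, not a single volume.
    $GLUSTER volume set all cluster.brick-multiplex on || return 1
    # Assumption: this release supports reading global options back
    # with "volume get all".
    $GLUSTER volume get all cluster.brick-multiplex
}
```

Running `GLUSTER=echo` before calling `enable_brick_mux` prints the two commands instead of executing them, which is a cheap way to check what would be sent to the cluster.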

Also note that there is one more global option, “cluster.max-bricks-per-process”, which defines the maximum number of brick instances that can run within a single brick process. Its default value is 250.
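To get a feel for what this cap means, here is a small arithmetic sketch. It assumes every brick on the node is compatible with every other (so only the cap forces extra processes); the function name `min_brick_processes` is made up for illustration.

```shell
# Lower bound on the number of glusterfsd processes on a node, assuming
# all bricks are mutually compatible and only the cap splits them up.
# $1 = number of bricks on the node, $2 = cap (defaults to 250).
min_brick_processes() {
    local bricks=$1 cap=${2:-250}
    # Integer ceiling of bricks / cap.
    echo $(( (bricks + cap - 1) / cap ))
}

min_brick_processes 1000 250   # prints 4
```

In practice the number can be higher, because bricks whose volumes have different options will not share a process.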

How brick multiplexing works:

When glusterd starts a volume with brick multiplexing enabled, for every brick instance it sends an RPC request to an existing brick process to attach the new brick instance, instead of forking a new process. Similarly, when a volume is stopped, the bricks belonging to that volume are detached from their parent brick processes, again through a brick op RPC request initiated by glusterd. Whether a brick can be attached to a particular brick process is determined by comparing the volume options of the respective volumes: if there is any discrepancy between the option lists of the two volumes, the bricks are considered incompatible and the next brick process is checked against the same criterion. So it is quite possible to see a new brick process being spawned in brick multiplexing mode when a new volume with a unique set of options is configured and started.
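The selection logic described above can be illustrated with a toy shell sketch. This is not glusterd's code (glusterd does this internally); the input format ("pid" followed by a comma-separated "key=value" list) and the function names are assumptions made purely for illustration, and the sketch ignores the per-process brick cap.

```shell
# Turn "k1=v1,k2=v2" into a sorted, one-per-line list so that the
# order in which options were set does not matter.
normalize_opts() {
    printf '%s\n' "$1" | tr ',' '\n' | sort
}

# Reads "pid options" lines on stdin (hypothetical format). Prints the
# pid of the first process whose option list matches $1 exactly, or
# "new" when none matches and a fresh brick process would be needed.
pick_brick_process() {
    local wanted pid opts
    wanted=$(normalize_opts "$1")
    while read -r pid opts; do
        if [ "$(normalize_opts "$opts")" = "$wanted" ]; then
            echo "$pid"
            return 0
        fi
    done
    echo "new"
}
```

For example, feeding it the lines `101 a=1` and `202 a=1,b=2` and asking for `b=2,a=1` selects pid 202, while asking for `c=3` yields `new`.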

Brick Graph construction:

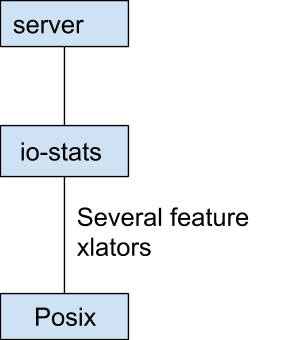

1. Non brick multiplexed graph:

Every brick process maintains a similar linear graph structure, with the server translator at the top and posix as the bottom-most translator in the stack. In this graph, the io-stats xlator saves the brick name along with the inode.

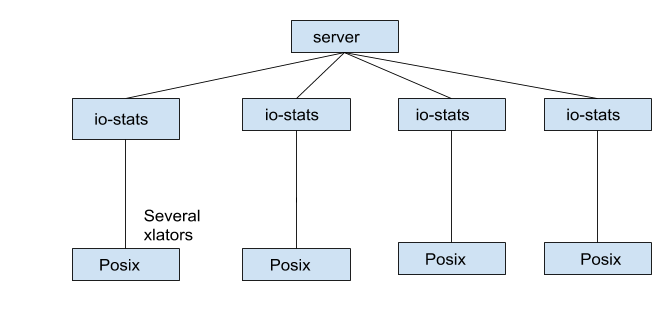

2. Brick Multiplex Graph:

The diagram for this case depicts a brick graph with 3 additional brick instances attached to a single parent brick process.

Logging changes:

One difference you will notice in logging with brick multiplexing is that, instead of an individual log file for every brick instance, only one log file is maintained: that of the parent brick.

How to find the parent brick and its log file for a given brick instance:

There are two ways to find a parent brick for a specific volume:

1) Run the gluster v status command; it prints the pid of each brick.

OR

2) Search for the brick name among the running glusterfsd processes, as below:

for pid in $(pgrep glusterfsd)

do

val=$(ls -l /proc/$pid/fd | grep "<brick-name>")

if [ -n "$val" ]

then

echo "pid is $pid"

fi

done

This prints the pid of the parent brick process to which the brick was attached. To find the log file for a specific brick, check the arguments of that parent process, as below:

ps -aef | grep $pid

The argument that follows -l on the process command line is the log file location of the parent brick; the same log file holds the messages of all bricks attached to that pid.
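Instead of reading the `ps` output by eye, the value after `-l` can be pulled out of `/proc/<pid>/cmdline`. The following is a minimal sketch; the function name `brick_log_file` and the optional second argument (an alternative proc root, useful only for testing) are hypothetical, and it assumes the log file is passed as a separate argument right after `-l`, as described above.

```shell
# Print the log file path of a brick process, i.e. the argument that
# follows "-l" on its command line.
# $1 = pid, $2 = proc root (defaults to /proc; overridable for testing).
brick_log_file() {
    local pid=$1 proc_root=${2:-/proc}
    # cmdline is NUL-separated; put each argument on its own line, then
    # print the line that comes right after a bare "-l".
    tr '\0' '\n' < "$proc_root/$pid/cmdline" |
        awk 'prev == "-l" { print; exit } { prev = $0 }'
}
```

On a Gluster node, `brick_log_file "$pid"` with the pid found earlier would print the parent brick's log file, which can then be searched for the brick of interest.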

Memory consumption observation:

We created a 3-node Gluster cluster, configured 250 replica 3 (1 x 3) volumes with default options, and analyzed the memory usage across all volumes in both cases.

First, measuring the memory consumption without enabling brick multiplexing:

val=0

for pid in $(pgrep glusterfsd); do mem=$(pmap -x $pid | grep total | awk '{print $4}');

val=$((val+mem)); done

echo $val

4628892

Now measuring the memory consumption with brick multiplexing enabled:

val=0

for pid in $(pgrep glusterfsd); do mem=$(pmap -x $pid | grep total | awk '{print $4}');

val=$((val+mem)); done

echo $val

1367828

As this quick test shows, with brick multiplexing the overall memory consumption (as reported by pmap, in KB) dropped to roughly one third (about 30%) of what it was without brick multiplexing.
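For anyone who wants to redo the arithmetic on their own numbers, here is a small sketch that converts the two totals from the test above and computes their ratio. The function name `mem_summary` is made up for illustration; the only assumption is that the inputs are in KB, which is what `pmap -x` reports.

```shell
# Compare two memory totals given in KB.
# $1 = total without brick multiplexing, $2 = total with it.
mem_summary() {
    awk -v a="$1" -v b="$2" 'BEGIN {
        printf "without: %.2f GiB\n", a / 1048576   # 1 GiB = 1048576 KB
        printf "with: %.2f GiB\n", b / 1048576
        printf "ratio: %.2f%%\n", 100 * b / a
    }'
}

# Totals taken from the two measurements in this post.
mem_summary 4628892 1367828
```

With the figures from this post, that works out to about 4.41 GiB without multiplexing versus 1.30 GiB with it, a ratio of 29.55%.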

So isn’t that cool? Please don’t hesitate to give it a try, and share feedback on how brick multiplexing helps in your deployment when you want Gluster to take care of your scaling worries around the number of volumes.